HMICFRS Inspection Summary & Insights

An analysis of the latest HMICFRS fire service inspections, highlighting national trends, areas of decline, and what the findings mean for the sector.

8/26/2025 | 5 min read

Reflecting on the latest round of Fire and Rescue Service inspections

With HMICFRS having recently published the final reports from the 2023–25 round of Fire and Rescue Service (FRS) inspections (https://hmicfrs.justiceinspectorates.gov.uk/frs-assessments-year/2023-2025/), it feels like an appropriate moment to take stock. This latest set of reports gives us a national picture of performance, progress and persistent challenges across the sector, and allows some useful comparison with earlier inspection rounds.

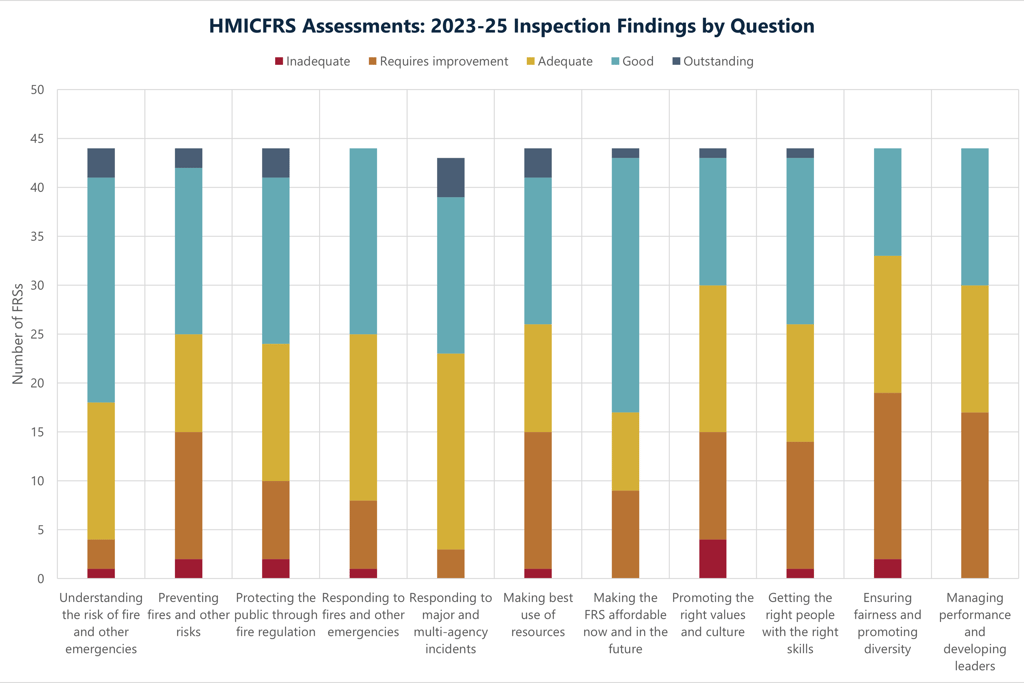

HMICFRS assesses each service against eleven core questions, grouped under the familiar pillars of Effectiveness, Efficiency and People. In the most recent round, the grading scale comprised five ratings: Outstanding, Good, Adequate, Requires Improvement, and Inadequate.

The addition of “Adequate” (absent from previous rounds) is one of a few changes in this cycle, and it affects any comparison between rounds. To enable comparison, I’ve applied a simple numerical scale:

Outstanding = 5

Good = 4

Adequate (2023–25 only) = 3

Requires Improvement = 2

Inadequate = 1

There have also been some changes to the wording of the questions themselves, but for comparison purposes I’ve aligned them on a one-to-one basis with their earlier equivalents, using the 2023–25 wording in this summary.

The intention here is not to name and shame, or to rank individual services, but to explore the national trends and highlight where performance appears to be improving and where it may be slipping.

National trends in inspection scores

By assigning a score to each of the 44 FRSs across all eleven questions, and calculating a national average for each question, we can see which areas are typically stronger and which present more consistent challenges.
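As a rough sketch of that calculation, the snippet below maps each grade to its score and averages the scores question by question. The function name `national_averages`, the question labels and the three example services are hypothetical and purely illustrative; they are not real inspection data, and this is not necessarily how the figures in this article were produced.

```python
from statistics import mean

# Numerical scale from the article; "Adequate" exists only in 2023-25.
GRADE_SCORES = {
    "Outstanding": 5,
    "Good": 4,
    "Adequate": 3,
    "Requires Improvement": 2,
    "Inadequate": 1,
}


def national_averages(grades_by_service):
    """Return {question: mean score across all services}, rounded to 2 dp."""
    scores_by_question = {}
    for grades in grades_by_service.values():
        for question, grade in grades.items():
            scores_by_question.setdefault(question, []).append(GRADE_SCORES[grade])
    return {q: round(mean(s), 2) for q, s in scores_by_question.items()}


# Hypothetical grades for three made-up services (NOT real inspection data).
example = {
    "Service A": {"Q1": "Good", "Q2": "Adequate"},
    "Service B": {"Q1": "Requires Improvement", "Q2": "Adequate"},
    "Service C": {"Q1": "Adequate", "Q2": "Inadequate"},
}

averages = national_averages(example)
print(averages)
```

In the real analysis the input would hold all 44 services and all eleven questions, with one such table per inspection round.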

Some headline observations:

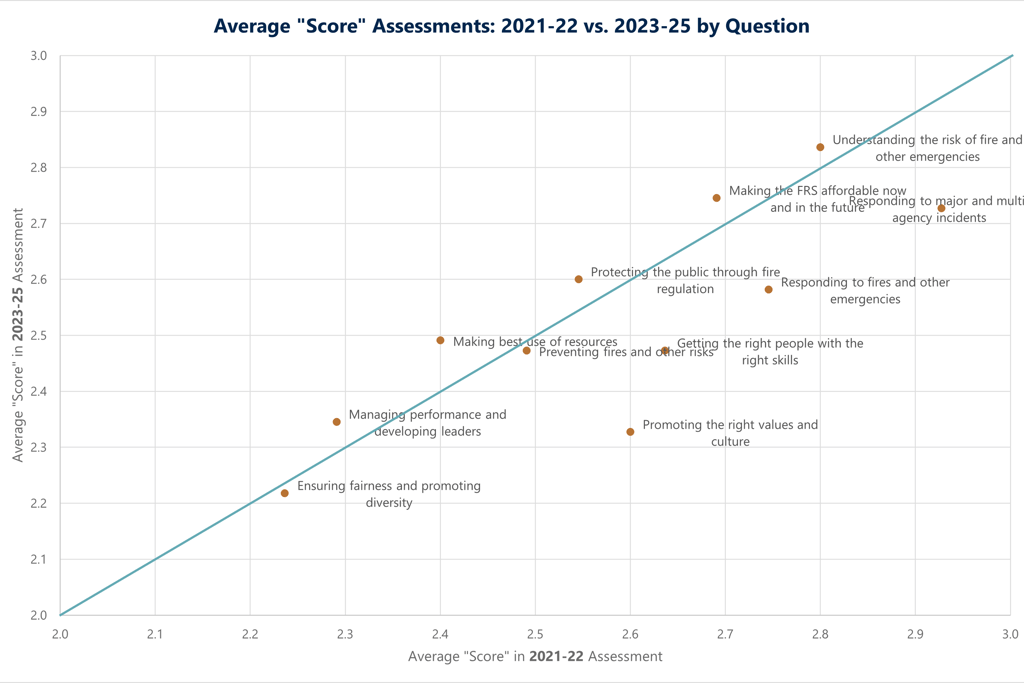

Seven of the eleven questions show very similar national averages between 2021–22 and 2023–25.

Four questions show a notable decline in average score over the two inspection rounds.

Across both inspection rounds, “Ensuring fairness and promoting diversity” remains the lowest-scoring question, with an average of just 2.2, which equates to being marginally above Requires Improvement (2 on the scale above).

Here are the four areas where national averages have fallen most clearly:

Promoting the right values and culture: 2.60 (in 2021–22) to 2.33 (in 2023–25)

Getting the right people with the right skills: 2.64 to 2.47

Responding to fires and other emergencies: 2.75 to 2.58

Responding to major and multi-agency incidents: 2.93 to 2.73
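To put the size of each fall side by side, here is a minimal sketch that computes the change in national average for the four questions, using only the figures quoted above. The variable names are illustrative.

```python
# National averages quoted above: (2021-22, 2023-25).
national_avg = {
    "Promoting the right values and culture": (2.60, 2.33),
    "Getting the right people with the right skills": (2.64, 2.47),
    "Responding to fires and other emergencies": (2.75, 2.58),
    "Responding to major and multi-agency incidents": (2.93, 2.73),
}

# Change in average score between rounds (negative means a decline).
changes = {q: round(new - old, 2) for q, (old, new) in national_avg.items()}

# The question with the most negative change fell furthest.
largest_fall = min(changes, key=changes.get)

for question, change in changes.items():
    print(f"{question}: {change:+.2f}")
print("Largest fall:", largest_fall)
```

On these figures the falls are 0.27, 0.17, 0.17 and 0.20 points respectively, so values and culture shows the largest drop.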

What’s driving the decline in these areas?

Promoting the right values and culture

The drop in this area reflects a theme running through many recent HMICFRS reports: a concern that organisational culture is not changing quickly or consistently enough. While some services have made tangible progress in embedding values, others continue to face challenges such as:

Legacy behaviours or attitudes that conflict with stated values

Inconsistent application of codes of ethics or leadership behaviours

Staff not feeling confident to challenge poor behaviour or to raise concerns

In some FRSs, this has been compounded by the findings of high-profile cultural reviews and whistleblowing reports, which have added urgency but also exposed deeper-rooted issues.

Getting the right people with the right skills

This question is about workforce planning, skills mapping, training delivery and succession planning. Declining scores often reflect:

Limited visibility of current and future skills gaps

Difficulty in releasing staff for training because of operational pressures

Inconsistent assurance around competence (e.g. breathing apparatus, incident command)

Gaps in specialist roles, including prevention and protection

The shift to “Adequate” in some cases reflects that processes exist, but aren’t always robustly applied or evaluated.

Responding to fires and other emergencies

This is the operational core, and any downward movement here naturally attracts attention. HMICFRS has raised concerns in some services about:

Outdated risk information or delayed updates to site-specific plans

Mobilising systems not always reflecting risk-based cover

Availability issues, especially in predominantly on-call services

Inconsistent performance management of response standards

While many services perform well at individual incidents, the inspection focus is on assurance and consistency, not just capability on the day.

Responding to major and multi-agency incidents

This area tends to be tested less frequently in reality, which makes planning, exercising and assurance all the more important. Lower scores here often reflect:

Limited or infrequent exercising with partner agencies

Weaknesses in learning from national events or JESIP debriefs

Gaps in the governance and oversight of preparedness

Lack of clarity about roles under the Civil Contingencies Act

Some FRSs have also faced turnover in key resilience roles, which has disrupted continuity.

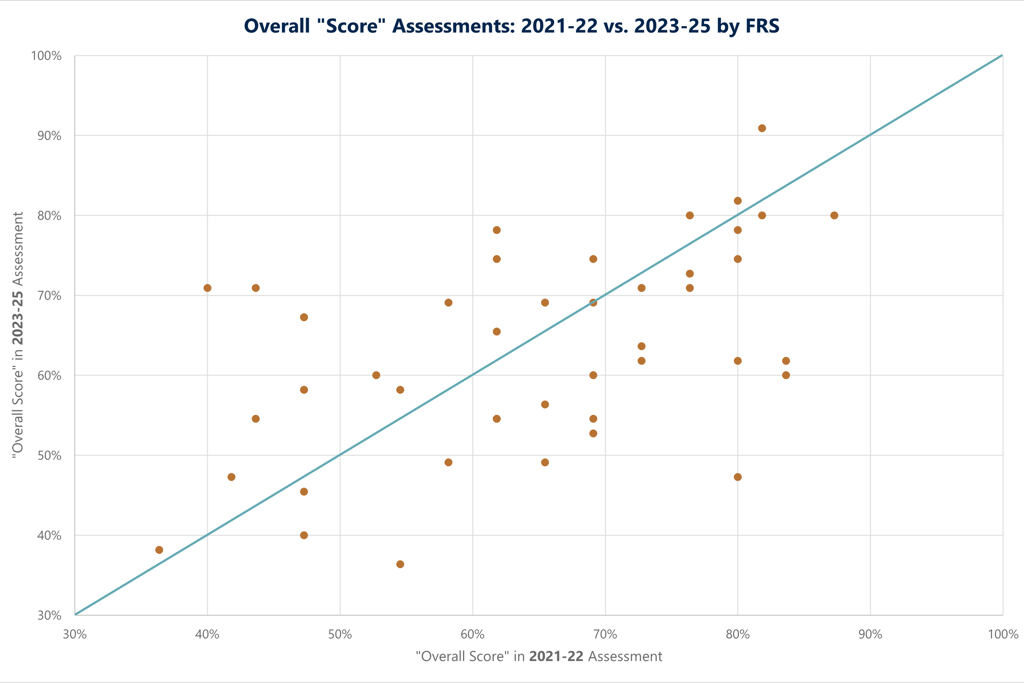

Overall FRS scores: stability, with some extremes

To create a simple national view, I’ve calculated an overall “score” for each FRS by summing the 11 question scores (using the 1–5 scale above) and converting that total into a percentage. An “all Outstanding” service would score 100%.
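A minimal sketch of that conversion follows, assuming the percentage is simply the summed score divided by the maximum possible total of 55 (11 questions at 5 points each). That reading is consistent with an “all Outstanding” service scoring 100%, but it is an assumption; the function name and the mixed example profile are hypothetical.

```python
MAX_SCORE_PER_QUESTION = 5  # "Outstanding" on the 1-5 scale
NUM_QUESTIONS = 11


def overall_percentage(question_scores):
    """Convert eleven 1-5 question scores into an overall percentage.

    Assumes percentage = total / maximum possible total (55).
    """
    if len(question_scores) != NUM_QUESTIONS:
        raise ValueError(f"expected {NUM_QUESTIONS} scores, got {len(question_scores)}")
    total = sum(question_scores)
    return 100 * total / (NUM_QUESTIONS * MAX_SCORE_PER_QUESTION)


all_outstanding = overall_percentage([5] * 11)
all_inadequate = overall_percentage([1] * 11)

# Hypothetical mixed profile (not a real service): total of 35 out of 55.
mixed = overall_percentage([4, 4, 3, 3, 3, 2, 2, 3, 4, 3, 4])

print(all_outstanding, all_inadequate, round(mixed, 1))
```

One consequence of this assumption is that the scale does not start at zero: a service graded Inadequate on every question would still score 20%.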

In 2021–22 scores ranged from 36% to 87%, with an average of 64%

In 2023–25 scores ranged from 36% to 91%, with an average of 63%

At a national level, that’s a picture of broad stability. However, when you look at individual services, some stark shifts emerge:

Most improved: one service rose from 40% in 2021–22 to 71% in 2023–25.

Most declined: another fell from 80% to 47% over the same period.

While the change in grading system (with the new “Adequate” band) may explain part of this, the broader pattern is telling. Significant improvement or deterioration usually reflects tangible organisational change, whether that’s leadership turnover, structural reform or the impact of major incidents.

What does all this mean?

These inspection results are not league tables, nor should they be treated as such. But they do provide a valuable barometer of how well FRSs are embedding good practice, learning from experience, and adapting to new challenges. Here are some key take-aways:

Culture and workforce-related themes are central to inspection, yet they are where national progress is slowest.

Operational capability remains strong in many areas, but assurance and resilience are under increasing scrutiny.

Improvement is possible, but usually takes sustained focus over multiple years, and can quickly reverse without it.

For services, the message from HMICFRS is increasingly consistent: it’s not enough to do the right things; you must also evidence and assure that you’re doing them well.

As ever, behind each percentage point is a story of leadership decisions, organisational culture and local context. If your service is looking to make sense of your own inspection outcomes, or to get ahead of the next round, an independent view can help turn data into direction.